One of the biggest challenges with deploying large language models like ChatGPT in production today is their proclivity to hallucinate. Hallucination is basically 'making stuff up': the models are trained on lots of text to predict the next token, and sometimes they make a 'bad' prediction and output something that isn't true. In a way, everything useful the models do is the result of a misprediction, since purely regurgitating text they have seen before is not that useful. As a result, it's very difficult to tell whether a model outputs something because it knows it's true or because it's being forced to make something up in order to keep producing tokens.

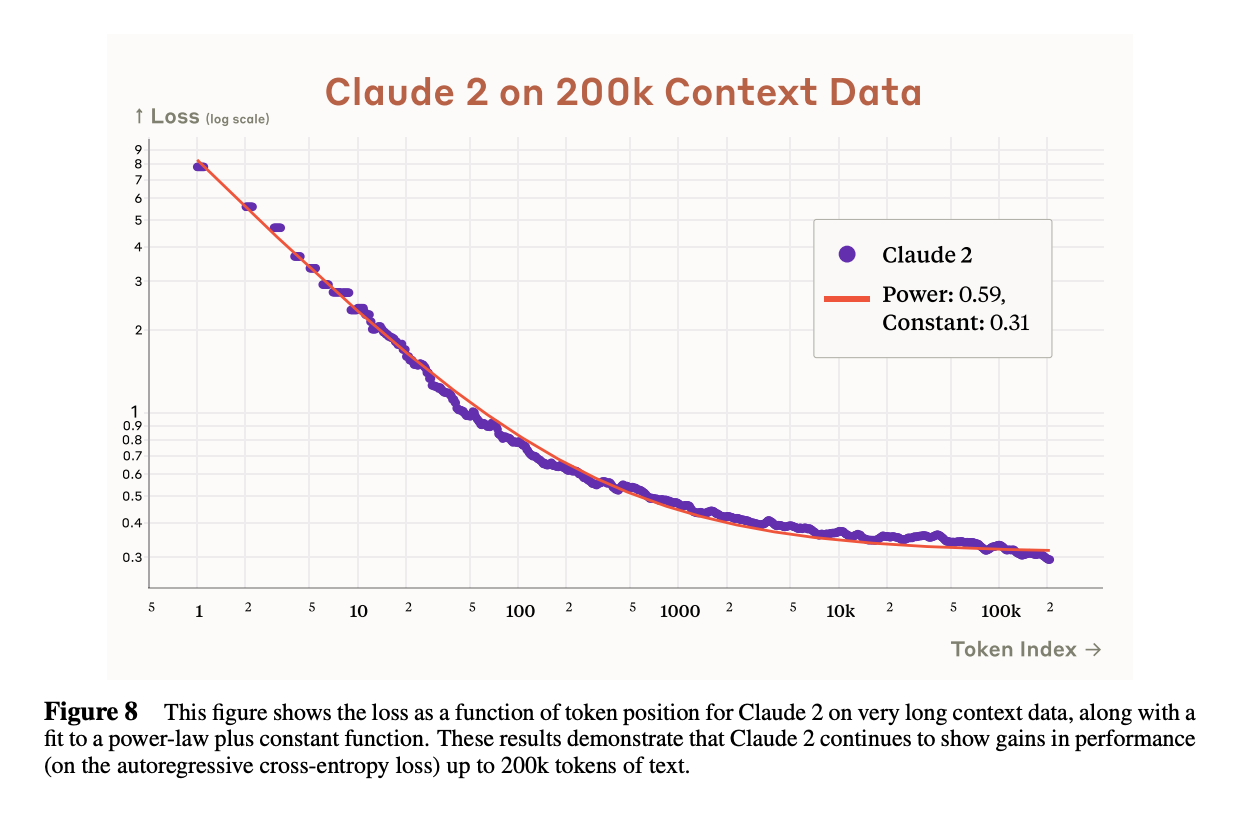

Recently, researchers have begun calling this behavior "confabulation" instead of hallucination. Yann LeCun's tweet thread is informative: the transformer models behind these chatbots are fundamentally autoregressive and simply maximize likelihood, so if the first few tokens are bad, the rest are bad too. The chart above shows this: most of a model's mispredictions happen in the first few tokens, and after that the model does well at minimizing loss. The 'useful misprediction', then, happens in the first few tokens; after that, the model has a good sense of how to flesh out the response. If the first few tokens are right (again, usually a 'misprediction'), the result is useful. If they are wrong, the model will still find plausible tokens for the rest, but the response was wrong from the start.
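To make the 'first few tokens' point concrete, here is a minimal sketch using a hand-written toy bigram model. The `TOY_MODEL` table, its probabilities, and the helper names are all hypothetical and not taken from any real LLM. Asked for the capital of Australia, greedy decoding commits to the plausible-but-wrong first token "Sydney"; once that token is fixed, every later token is produced with exactly the same confidence as in the correct answer.

```python
# Toy bigram "language model": P(next token | previous token).
# Hypothetical numbers for the prompt "What is the capital of Australia?".
TOY_MODEL = {
    "<bos>": {"Sydney": 0.55, "Canberra": 0.45},
    "Sydney": {"is": 1.0},
    "Canberra": {"is": 1.0},
    "is": {"the": 1.0},
    "the": {"capital": 0.9, "largest": 0.1},
    "capital": {"<eos>": 1.0},
    "largest": {"<eos>": 1.0},
}


def greedy_continue(model, prefix, max_new_tokens=10):
    """Autoregressively extend `prefix`, always taking the most likely next token."""
    tokens = list(prefix)
    for _ in range(max_new_tokens):
        dist = model.get(tokens[-1], {})
        if not dist:
            break
        next_token = max(dist, key=dist.get)
        tokens.append(next_token)
        if next_token == "<eos>":
            break
    return tokens


def per_token_probs(model, tokens):
    """Probability the model assigned to each generated token, given the token before it."""
    return [model[prev][tok] for prev, tok in zip(tokens, tokens[1:])]


# Left to itself, the model commits to "Sydney" at the very first step.
wrong = greedy_continue(TOY_MODEL, ["<bos>"])
# Forcing a correct first token gives a correct answer with the same continuation.
right = greedy_continue(TOY_MODEL, ["<bos>", "Canberra"])

print(" ".join(wrong[1:-1]), per_token_probs(TOY_MODEL, wrong))
print(" ".join(right[1:-1]), per_token_probs(TOY_MODEL, right))
```

The two answers differ only at the first position; everything after it is equally fluent either way, which is the sense in which the error is baked in by the opening token. Real models condition on the whole prefix rather than only the previous token, but the same dynamic applies.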

Sasha Rush recently gave an example: say you ask a model 'What county is Manchester in, in England?' along with some context from Wikipedia. The model will probably output the right answer, but how do you know whether it's answering from knowledge stored in its weights or from the information you provided in the context window? It's not necessarily bad if the model is answering from its own knowledge; you'd consider that 'smart' in a human. But what if the model has absolutely no basis for the prediction: it didn't find anything in the context, it didn't really have the information in its weights, and it simply had to output a guess? If the guess was right, does that mean the model 'knew' something?

Models often output 'correct' or 'true' results, and for most task-based instructions, accuracy is all people look for. Of course, merely having a true belief is not knowledge. The traditional view is that knowledge also requires justification: essentially 'show your work', or the plot of Slumdog Millionaire. For AI models, 'think before you speak', or chain-of-thought (CoT) prompting, is close to asking the model for justification. We generally do see better results with CoT, but can we say that justified true belief is knowledge?

No! AI outputs are a particular form of the Gettier problem, a family of thought experiments showing that a belief can be true and justified and still not count as knowledge. Here's a transcript of ChatGPT creating a few such cases (notably, I don't think #2 is actually a Gettier problem!).

Say you are standing on a farm with rolling hills, you see a cow off in the distance, and you conclude "there is a cow in that field." If there really is a cow out there, you have a justified true belief. However, this is not necessarily knowledge. Suppose what you actually see is a cardboard cutout of a cow, and a real cow happens to be standing behind the cutout, completely hidden from view. Your belief is true and justified, but it is not knowledge, because it is only true by luck. I think this is the right way to think about LLM outputs too: all of the current work pushes toward results that are true and justified, but not necessarily knowledge. To be fair, it's unclear how to define knowledge even for humans, let alone for an alien machine.

The future

Recall the autoregressive problem: if the first few tokens of a response are bad, the rest of the response is probably going to be bad too. You can also think of this as a form of mode collapse. It also means that putting bad data into the prompt is very harmful, which is one reason why a large context window is not a free lunch.

One solution, then, is to make sure the first few tokens are good. You could do this by injecting relevant facts into the prompt and making sure the model starts off by recapping correct facts (e.g., by fine-tuning it to generate such responses). Doing this requires access to good facts, though!
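A minimal sketch of the 'inject facts, then recap them first' idea, assuming you already have trusted facts in hand and a model that will continue from a prefilled answer. The paragraph above suggests fine-tuning the model to produce the recap itself; this sketch swaps in the simpler trick of writing the recap into the start of the answer. `Fact` and `build_grounded_prompt` are hypothetical names, not any provider's API.

```python
from dataclasses import dataclass


@dataclass
class Fact:
    """One (subject, relation, object) fact from a trusted source."""
    subject: str
    relation: str
    obj: str

    def render(self) -> str:
        return f"{self.subject} {self.relation} {self.obj}."


def build_grounded_prompt(question: str, facts: list[Fact]) -> str:
    """Put trusted facts in the prompt and prefill the answer with a recap of them.

    Whatever the model generates next is conditioned on known-good opening
    tokens instead of on its own first guess.
    """
    fact_list = "\n".join(f"- {fact.render()}" for fact in facts)
    recap = " ".join(fact.render() for fact in facts)
    return (
        "Answer the question using only the facts below.\n\n"
        f"Facts:\n{fact_list}\n\n"
        f"Question: {question}\n"
        f"Answer: {recap} So,"
    )


facts = [
    Fact("Manchester", "is a city in", "the metropolitan county of Greater Manchester"),
    Fact("Manchester", "was historically part of", "Lancashire"),
]
prompt = build_grounded_prompt("What county is Manchester in, in England?", facts)
print(prompt)
```

The model's job then starts at "So,", after the facts have already been stated, so the risky opening tokens are no longer its own guess.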

So OpenAI is partnering with the Associated Press for facts. DeepMind has published a solid body of work on integrating LLMs with knowledge graphs (e.g., "WikiGraphs: A Wikipedia - Knowledge Graph Paired Dataset" and "Graph schemas as abstractions for transfer learning, inference, and planning"). And, shocker, DeepMind is part of Google, which only has the world's best knowledge graph.

I believe Bard now has at least rudimentary integration with Google's knowledge graph. Bard's underlying model doesn't seem on par with GPT-4 (or even GPT-3) in terms of reasoning, but by virtue of having tons of relevant, up-to-date information, it's still usable as an assistant for lots of tasks. Google's next-generation Gemini model supposedly incorporates techniques from AlphaGo. That's incredibly vague, but my guess is that it includes the tree-search approach. AlphaGo used Monte Carlo tree search to explore the space of possible moves and pick the best one; Gemini could use a similar approach to 'walk' the knowledge graph and decide which facts to inject into the prompt first. There are many different ways to tie LLMs and knowledge graphs together (this paper is a nice survey of many of them), and I frankly don't have a strong view on which of these approaches will succeed.
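The 'walk the knowledge graph' guess can be sketched as a search that expands outward from the entity in the question and keeps the most relevant facts first. This is a plain best-first search, a much simpler stand-in for AlphaGo's Monte Carlo tree search, and nothing here reflects how Gemini actually works. The toy triples, the word-overlap `relevance` scorer, and the `budget` parameter are all hypothetical; a real system would presumably use a learned scorer.

```python
import heapq

# Hypothetical toy knowledge graph of (subject, relation, object) triples.
TRIPLES = [
    ("Manchester", "located in", "Greater Manchester"),
    ("Manchester", "historically part of", "Lancashire"),
    ("Manchester", "home to", "Manchester United"),
    ("Greater Manchester", "is a", "metropolitan county"),
    ("Greater Manchester", "located in", "England"),
    ("Manchester United", "plays at", "Old Trafford"),
]


def outgoing(entity):
    """All triples whose subject is `entity`."""
    return [t for t in TRIPLES if t[0] == entity]


def relevance(triple, question_words):
    """Hypothetical scorer: share of the triple's distinct words that appear in the question."""
    words = set(" ".join(triple).lower().split())
    return len(words & question_words) / len(words)


def select_facts(question, start_entity, budget=3):
    """Best-first walk from `start_entity`, returning up to `budget` facts to inject."""
    question_words = set(question.lower().replace("?", "").replace(",", "").split())
    frontier = []  # heapq is a min-heap, so scores are stored negated
    for t in outgoing(start_entity):
        heapq.heappush(frontier, (-relevance(t, question_words), t))
    chosen, seen = [], set()
    while frontier and len(chosen) < budget:
        _, triple = heapq.heappop(frontier)
        if triple in seen:
            continue
        seen.add(triple)
        chosen.append(triple)
        # Continue the walk from the object of the fact we just kept.
        for t in outgoing(triple[2]):
            if t not in seen:
                heapq.heappush(frontier, (-relevance(t, question_words), t))
    return chosen


facts = select_facts("What county is Manchester in, in England?", "Manchester")
for subject, relation, obj in facts:
    print(f"{subject} {relation} {obj}.")
```

On this toy graph the walk hops from Manchester to Greater Manchester and keeps the county-related facts while skipping the football club; the selected facts are what you would then place at the start of the prompt.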

The other approach is to simply make the model itself much better and more likely to give the right response. OpenAI is taking this approach when it pays industry experts (e.g., coders, nurses) to provide RLHF feedback on its model or write exclusive responses. This gets expensive very quickly: the better you are in your field, the more expensive your time is, and the more complex or advanced a problem is, the longer it takes to evaluate whether an answer is right, or to write an answer in the first place. I think there's good evidence that this approach can work, but oh my god, it's expensive.

In general, I think both approaches are likely to work well, and that the model providers will pursue both in parallel. Both will be hard for the open source ecosystem to replicate, most notably the knowledge graph approach. It's probably possible to distill the information from expert-finetuned models, but a knowledge graph tightly integrated with a proprietary LLM is likely to be much harder to replicate.

I think the knowledge graph approach makes a lot of sense and will likely work very well. My worry is that there's no good open source equivalent of Google's knowledge graph. The best open source option is Wikipedia, which is great for research purposes but won't match what closed-source models have access to. Collecting up-to-date information is a tricky problem to incentivize. Google Maps is probably one of the best local data sources, and I would not be surprised if people were also scraping Yelp to build equivalent datasets.

A leading researcher countered that local models will be cheaper and better for knowledge-graph-integrated systems, because each query is 'free' and a model may want to make many queries over the graph. I think we may have talked past each other: I don't think consumers will even have a local knowledge graph to query. I think there is a VC-scale business here in providing an open data layer (pay-per-query). Like most of my ideas: 3-6 months too early :)

I think the dream is to separate reasoning from facts: imagine a very, very smart model that just operates on different corpora of facts. From the enterprise perspective, imagine that GPT-X runs solely in your cloud and has access only to your own data; other companies might run the same GPT-X, but each over its own proprietary data. Because the model doesn't need to store facts, it can be much smaller or sparser and fit on-device too. Similarly, imagine Apple devices with an on-device model that uses only your personal data, which never leaves the phone. A very exciting future if we can pull it off!